Whether customers realize it or not, AI is raising their standards for the customer experience. We’re in an era where customer service needs to check a lot of boxes for customers to return, let alone leave a good review.

Customers are noticing the gap between leading and lagging experiences.

Getting a pulse check on your customer satisfaction is critical to understanding how your customer experience can improve, what’s causing your customers to stay or go, and whether there are any gaps in your product or service that your customers want fixed.

That’s why CSAT is not only one of the most popular metrics in customer service but also one of the most hotly debated when it comes to reporting. CSAT isn’t perfect — it doesn’t necessarily capture how convenient or difficult it was for the customer to get help, nor will it capture every interaction the customer has with your team. The customer experience is complex, so it would be an oversimplification to sum up performance with just one metric.

Nonetheless, CSAT is still invaluable feedback, and when assessed alongside other KPIs like Customer Effort Score (CES), it can help teams understand where to iron out kinks in the customer experience. Here, we’re taking a deep dive into how to get CSAT right, even as its role evolves with AI in the mix.

The basics: How to calculate and measure CSAT

CSAT is a customer service metric that measures how satisfied a customer was with a recent interaction, product, or service.

The way CSAT is collected and measured depends on your industry, company size, customer base, and complexity of product/service. You’ll need to decide on a rating scale, how many questions to include, frequency, and method for collecting feedback.

Decide on a rating scale

CSAT rating scales vary. Common options include:

Thumbs up/thumbs down

Happy-neutral-unhappy emoji reactions

1-5 star scale

1-10 numerical scale

Your choice depends on the level of detail you want from your customers. Just avoid offering so many options that they abandon the survey.

For example, our support team at Front uses a 5-star scale because it’s simple, quick, and easy for customers to use, and it preserves nuance that a binary rating system would lose.

Identify what question(s) you want to ask

CSAT surveys can range from one question to several plus an open comment box. Feel free to experiment with length to see what gets the best completion rates from your customers. Here are a few example questions:

How satisfied are you with the service you received?

How would you rate your most recent interaction?

If there was one thing we could have done to make the experience better, what would it be?

Determine when and how you want to hear from your customers

There are two ways to gather customer feedback, and you can test what yields the best engagement rates with your customers.

Active customer feedback

Active customer feedback is when a company requests customer feedback on a recent service interaction. This can be done immediately after the customer interaction (aka transactional survey) or it can be a timed follow-up at 2-, 24-, or 48-hour intervals (aka drip survey). Anything beyond 72 hours might be difficult for customers as it’s ideal to get their feedback when their experience is still rather fresh in their minds.

Passive customer feedback

Passive customer feedback is when the customer chooses to provide their feedback on a recent service interaction. This feedback isn’t as structured as a CSAT survey, but can still be very valuable even though it could be spread out across various channels and formats. Some examples of passive feedback include:

Third-party review sites like Yelp, Google Reviews, G2, Capterra

Social media posts or comments

Support ticket data — the customer interactions that didn’t leave a review

Community forums

An “always-on” feedback option, whether that’s a link within your email signature or a widget on your website or app

What makes a great CSAT score?

Your overall CSAT score is calculated by taking the total number of CSAT surveys with positive ratings, like 4 or 5 stars, and dividing by the total responses. Multiply by 100 to get a percentage.
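As a minimal sketch of that calculation, assuming a 5-star scale where 4 and 5 count as positive: the function name `csat_score`, the `positive_threshold` parameter, and the sample ratings are illustrative assumptions, not part of any particular survey tool.

```python
def csat_score(ratings, positive_threshold=4):
    """Return CSAT as a percentage: positive responses / total responses * 100."""
    if not ratings:
        raise ValueError("CSAT is undefined without survey responses")
    # A response counts as positive when it meets the threshold (assumed: 4 stars).
    positive = sum(1 for rating in ratings if rating >= positive_threshold)
    return 100 * positive / len(ratings)


# Hypothetical sample: 8 of these 10 responses are 4 or 5 stars.
sample_ratings = [5, 4, 5, 3, 5, 4, 2, 5, 4, 5]
print(f"CSAT: {csat_score(sample_ratings):.1f}%")
```

If you use a different scale, such as thumbs up/down or 1-10, only the threshold that defines a "positive" rating changes.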

This score will fluctuate as new customer ratings come in. As a rough guide:

90% and above is considered exceptional

80% to 89% is awesome

70% to 79% is good

60% to 69% is okay

50% to 59% could be better

49% or below is worth doing a deep dive into the root cause
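The benchmark ranges above can be expressed as a simple lookup. This is a sketch only: the function name `csat_band` is hypothetical, and because the ranges are listed as whole percentages, it assumes a fractional score such as 89.5% falls into the lower band.

```python
def csat_band(score):
    """Map a CSAT percentage to the rough benchmark labels listed above."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    # Each entry is (lower bound of the band, label), checked from highest to lowest.
    bands = [
        (90, "exceptional"),
        (80, "awesome"),
        (70, "good"),
        (60, "okay"),
        (50, "could be better"),
    ]
    for floor, label in bands:
        if score >= floor:
            return label
    return "worth a root-cause deep dive"


print(csat_band(84))  # falls in the 80% to 89% band
```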

How often you report on CSAT depends on your business goals and expectations. At Front, our head of support reports to leadership monthly, and our public support metrics page updates daily with the 90-day average score.

The key takeaway from a CSAT score is that it can show a snapshot of whether or not you’re meeting your customer expectations. Businesses can use CSAT to understand how they can improve their customer interactions while reinforcing the positive experiences and minimizing the negative ones.

Pros and cons of tracking CSAT

While CSAT can help businesses understand how their customers feel about their customer experience, it also has some limitations.


CSAT is a great starting point for measuring the customer experience, but to truly understand if customer needs are being met, brands may need to combine it with other metrics or qualitative insights, depending on how they define success.

DSAT: The other side of the CSAT coin

CSAT should always be assessed alongside customer dissatisfaction (DSAT). At Front, we consider any review with 3 stars or fewer a DSAT. Calculate DSAT by dividing the number of dissatisfied survey responses by the total number of survey responses, then multiplying by 100 to get a percentage.

Standards for DSAT scores will vary by industry, but generally, you’ll want to keep DSAT under 30%.
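Here is a small sketch of the DSAT calculation and the 30% guideline, assuming a 5-star scale where 3 stars or fewer counts as dissatisfied. The names `dsat_score` and `needs_attention`, the `ceiling` parameter, and the sample data are assumptions for illustration.

```python
def dsat_score(ratings, dissatisfied_max=3):
    """Return DSAT as a percentage: dissatisfied responses / total responses * 100."""
    if not ratings:
        raise ValueError("DSAT is undefined without survey responses")
    dissatisfied = sum(1 for rating in ratings if rating <= dissatisfied_max)
    return 100 * dissatisfied / len(ratings)


def needs_attention(ratings, ceiling=30.0):
    """Flag a set of ratings whose DSAT is not under the ceiling (assumed: 30%)."""
    return dsat_score(ratings) >= ceiling


# Hypothetical sample: 2 of these 10 responses are 3 stars or fewer.
sample_ratings = [5, 4, 5, 3, 5, 4, 2, 5, 4, 5]
print(f"DSAT: {dsat_score(sample_ratings):.1f}%")
print("Needs attention:", needs_attention(sample_ratings))
```

On a 5-star scale with these thresholds, every response is either positive (4 or 5) or dissatisfied (3 or fewer), so CSAT and DSAT add up to 100%.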

Why do customers leave a dissatisfied score? Here are some reasons:

Slow response

Inefficient help: Resolution was drawn out over a long period of time

Agent performance: Rude, not helpful, or gave inaccurate information

Policy disagreements: Pricing, return, exchange, contract terms, etc.

Unhappy with the process: Difficulty finding help or requesting support, too hard to reach a human agent, etc.

Defective product or subpar service

Sometimes customers might leave a poor rating that’s outside of an agent’s control, like lack of product capability, or they simply did not like the answer despite the agent meeting all requirements, like responding within the service level agreement (SLA), offering accurate solutions, etc. Another limitation is when the rating is given without additional commentary, so it’s hard to understand what needs to be improved.

Categorizing each dissatisfied response can help identify trends in what the customer was unhappy about. It can also help inform whether or not you need to revise the CSAT survey with questions that could offer more clarity.
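One way to surface those trends is to tag each dissatisfied response with a category and count them. The sketch below assumes a reviewer has already assigned one category per response. The ticket IDs, field names, and the `dsat_trends` helper are hypothetical.

```python
from collections import Counter

# Hypothetical DSAT responses, each tagged with one category during review.
dsat_responses = [
    {"ticket": "T-101", "rating": 2, "category": "slow response"},
    {"ticket": "T-102", "rating": 3, "category": "policy disagreement"},
    {"ticket": "T-103", "rating": 1, "category": "slow response"},
    {"ticket": "T-104", "rating": 3, "category": "agent performance"},
    {"ticket": "T-105", "rating": 2, "category": "slow response"},
]


def dsat_trends(responses):
    """Return (category, count, share_percent) tuples, most common first."""
    counts = Counter(response["category"] for response in responses)
    total = sum(counts.values())
    return [(category, n, 100 * n / total) for category, n in counts.most_common()]


for category, n, share in dsat_trends(dsat_responses):
    print(f"{category}: {n} ({share:.0f}%)")
```

A category that dominates the list is a reasonable place to start a root-cause review.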

Either way, DSAT should be investigated as it presents valuable learning opportunities for understanding the customer. Front’s support team reviews every DSAT rating to dig into why the customer was unhappy with the support. However, given the external factors mentioned above, agents can request further review if they feel the feedback was a mistake.

Not all CSATs are the same

As businesses strive to meet customers where they are, they’re investing in more channels that each have their own specifications.

Compare by channel

| Channel | Expected first response time |
|---|---|
| Live chat | 37 seconds [4] |
| Email | 1 business day or less [5] |
| Social media | Within 24 hours [6] |

Customer expectations vary by channel, which is why many businesses evaluate CSAT channel by channel. For example, because chats are usually fast and transactional, the customer may not be as open to a CSAT survey at the end of the chat but could be more open to a drip CSAT survey sent later by email. When CSAT is segmented by channel, businesses can make more targeted customer experience improvements in each channel where they engage with customers.
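Segmenting by channel can be sketched as grouping survey responses before computing the score. This example assumes each response is a `(channel, rating)` pair on a 5-star scale with 4 and 5 counted as positive. The function name `csat_by_channel` and the sample data are illustrative.

```python
from collections import defaultdict


def csat_by_channel(responses, positive_threshold=4):
    """Return a dict mapping each channel to its CSAT percentage."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for channel, rating in responses:
        totals[channel] += 1
        if rating >= positive_threshold:
            positives[channel] += 1
    return {channel: 100 * positives[channel] / totals[channel] for channel in totals}


# Hypothetical responses collected across three channels.
responses = [
    ("chat", 5), ("chat", 3), ("chat", 4),
    ("email", 4), ("email", 5), ("email", 5),
    ("social", 2), ("social", 5),
]
for channel, score in csat_by_channel(responses).items():
    print(f"{channel}: {score:.1f}%")
```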

Compare satisfaction between human- and AI-driven experiences

Many businesses are paying particular attention to AI-led service so they can begin tracking the ROI on their AI investments. Customer service and support leaders are not only trying to quantify how well AI deflects simpler inquiries, but they’re also trying to understand the difference in the customer experience between AI- and agent-led service.

In order to understand the nuances between the two types of service, it’s recommended to evaluate queries resolved by humans versus those resolved by bots separately to drive more targeted improvements.

For example, the CSAT for bot interactions might now encompass more of the simpler inquiries. Was AI able to understand the customer inquiry correctly? Did AI provide the right help center article? If human assistance was required, was the transition fast and seamless? Businesses can then look back into their chat flows to see where the logic and flow could be smoother.

These assessments look different than the human-to-human interactions, which might now cover more of the complex inquiries. Did the agent respond within the SLA? Did the escalation occur because of a product bug and was the agent able to handle it with empathy? Were the right subject matter experts (SMEs) looped in and did they respond quickly? These insights enable businesses to dive deeper into what area — customer journey, product improvement, operations, etc. — should be refined.

What about when the customer inquiry was handled by AI first before getting handed off to an agent? Depending on the goals, CSAT can be attributed to a number of breakpoints. Some companies might choose to credit AI for resolving some inquiries within a chat before it needed to escalate a follow-up question to an agent. Others might credit the resolution to the agent who closed out the ticket. Regardless, each breakpoint invites the opportunity to dive deeper into how issues can be resolved more effectively.
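To see how the choice of breakpoint changes the numbers, here is a sketch with two hypothetical attribution policies: "closer" credits only whoever closed the ticket, while "all_handlers" credits everyone who touched it. The data shape, policy names, and the `csat_by_handler` function are assumptions for illustration, not a standard.

```python
from collections import defaultdict


def csat_by_handler(tickets, policy="closer", positive_threshold=4):
    """Compute CSAT per handler type under a chosen attribution policy.

    Each ticket is (handlers, rating), where handlers is a non-empty list
    in the order they worked the ticket, e.g. ["ai", "agent"].
    """
    if policy not in ("closer", "all_handlers"):
        raise ValueError("policy must be 'closer' or 'all_handlers'")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for handlers, rating in tickets:
        # "closer" credits the last handler; "all_handlers" credits each one once.
        credited = [handlers[-1]] if policy == "closer" else set(handlers)
        for handler in credited:
            totals[handler] += 1
            if rating >= positive_threshold:
                positives[handler] += 1
    return {handler: 100 * positives[handler] / totals[handler] for handler in totals}


# Hypothetical tickets: two resolved by AI alone, two handed off, one agent-only.
tickets = [
    (["ai"], 5),
    (["ai"], 4),
    (["ai", "agent"], 2),
    (["ai", "agent"], 5),
    (["agent"], 4),
]
print(csat_by_handler(tickets, policy="closer"))
print(csat_by_handler(tickets, policy="all_handlers"))
```

In this sample, the AI score drops when handed-off tickets also count toward it, which is why it helps to state the attribution rule alongside any AI-versus-agent comparison.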

Empowered team, better CSAT

Customer service teams are still the backbone of exceptional customer experiences. AI won’t replace human support; rather, it will free humans to do what they do best, whether that’s giving an empathetic response or getting to the bottom of a highly technical problem.

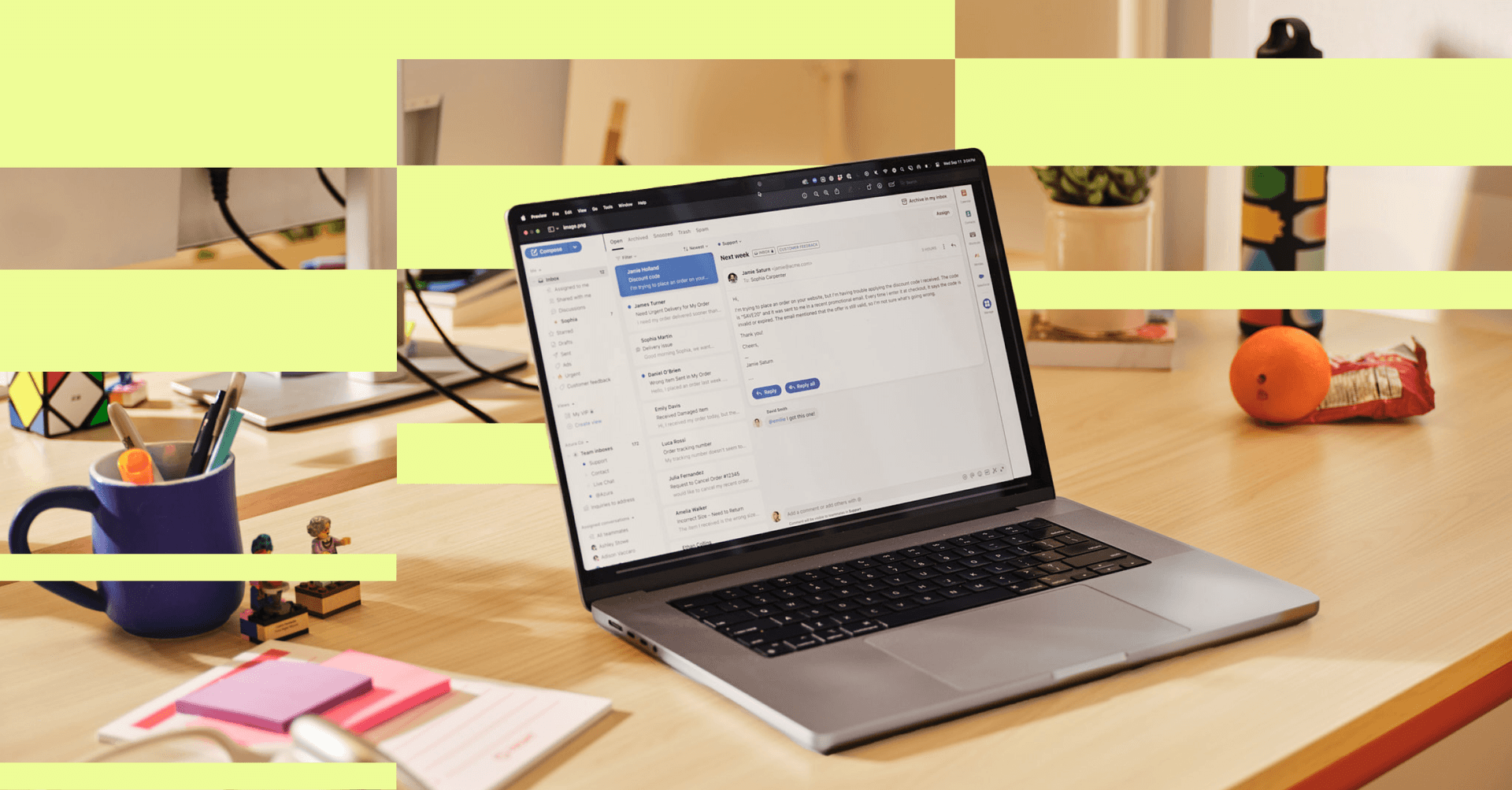

In order for agents to do their best work, they need to be empowered with the right tools and processes. That means easy access to customer data, real-time team collaboration, practical AI assistance, more automated workflows, and actionable metrics.

Those are the very same principles that allow Front’s award-winning support team to average 98% CSAT — 13 points higher than the industry average. To help your team achieve the same, we’ve recently made our support team’s playbook public.

guide: 99% CSAT Playbook: 5 steps for empowering agents in the age of AI

This playbook shows the five steps customer service teams can take to keep their CSAT scores on the rise even when customer expectations are at an all-time high.

Sources:

State of the Connected Customer. Salesforce.

ACA Customer Service/CX Study. Shep Hyken.

2023 Customer Service Report. LiveChat.

This Is Exactly How Long People Expect You to Take to Respond to an Email — and Why It Matters. Inc.

The 2023 Sprout Social Index™ Report. Sprout Social.

Written by Andrea Lean

Originally Published: 7 November 2024